Real-time UR10e following a tracked object

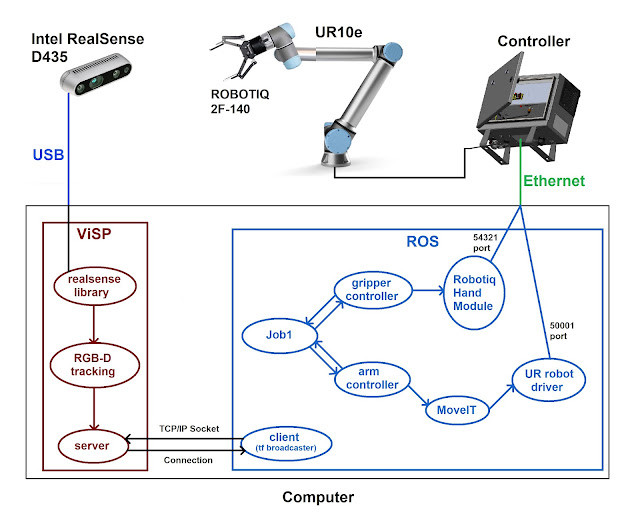

As a first trial, I developed a demonstration in which the UR10e follows, in real time, an object tracked with a single RGB-D camera (Intel RealSense D435).

- As described in this previous post, ViSP processes the images acquired by the RealSense RGB-D camera and continuously tracks the object.

- A TCP/IP socket connection is established between ViSP and ROS, both running on the same computer. This socket carries the geometric transformation between the camera and the object into the ROS environment. This previous post describes the connection in more detail.

- Besides the camera-to-object transformation, the transformation between the robot and the camera is also required: without it, the robot cannot express the object's position and orientation in its own reference frame. To obtain it, I performed a manual calibration, as described in this previous post.

- Finally, to control the UR10e collaborative robot, I installed the External Control URCap (see this post) and used MoveIt to command the robot arm, as described in this previous post.
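
The data path through the socket and calibration steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used in this demo: it assumes a hypothetical wire format in which ViSP sends each pose as one newline-terminated line of 16 comma-separated floats (the 4x4 homogeneous matrix cMo, row-major, borrowing ViSP's cMo naming), and that the manual calibration produced the robot base-to-camera matrix bMc. The object pose in the robot base frame is then the product bMo = bMc · cMo. The function names are hypothetical.

```python
import socket
from typing import Iterator, List

# 4x4 homogeneous transform stored as a row-major list of rows.
Matrix = List[List[float]]


def parse_pose_line(line: str) -> Matrix:
    """Turn one text line of 16 comma-separated floats into a 4x4 matrix.

    Hypothetical wire format (an assumption): the row-major entries of cMo,
    the pose of the object expressed in the camera frame.
    """
    values = [float(v) for v in line.strip().split(",")]
    if len(values) != 16:
        raise ValueError(f"expected 16 values, got {len(values)}")
    return [values[row * 4:(row + 1) * 4] for row in range(4)]


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Compose two homogeneous transforms (plain 4x4 matrix product)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def object_in_base(bMc: Matrix, cMo: Matrix) -> Matrix:
    """Express the tracked object in the robot base frame: bMo = bMc * cMo.

    bMc comes from the camera calibration; cMo comes from the tracker.
    """
    return matmul4(bMc, cMo)


def read_poses(sock: socket.socket) -> Iterator[Matrix]:
    """Yield one cMo matrix per newline-terminated message on the socket.

    TCP is a byte stream, so a single recv() may return a partial message
    or several messages at once; buffering until b"\\n" handles both cases.
    """
    buffer = b""
    while True:
        chunk = sock.recv(4096)
        if not chunk:  # the sender closed the connection
            return
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            if line.strip():
                yield parse_pose_line(line.decode("ascii"))


if __name__ == "__main__":
    # Example values only: camera offset by (0.5, 0.0, 1.0) m from the base,
    # axes aligned with the base; object 0.3 m along the camera's z axis.
    bMc = [[1.0, 0.0, 0.0, 0.5],
           [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 1.0],
           [0.0, 0.0, 0.0, 1.0]]
    sender, receiver = socket.socketpair()  # stands in for the ViSP/ROS link
    sender.sendall(b"1,0,0,0,0,1,0,0,0,0,1,0.3,0,0,0,1\n")
    sender.close()
    for cMo in read_poses(receiver):
        bMo = object_in_base(bMc, cMo)
        print("object position in base frame:", [row[3] for row in bMo[:3]])
    receiver.close()
```

Framing each message with a newline is one simple choice for a stream socket; a fixed-size binary message would work equally well. In the full pipeline, the resulting bMo would then be turned into a target pose for MoveIt.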

The final result can be seen in this video:

This is an important milestone, since it brings together several problems tackled over the past weeks.
