Object Tracking using ViSP in ROS - 1st demo

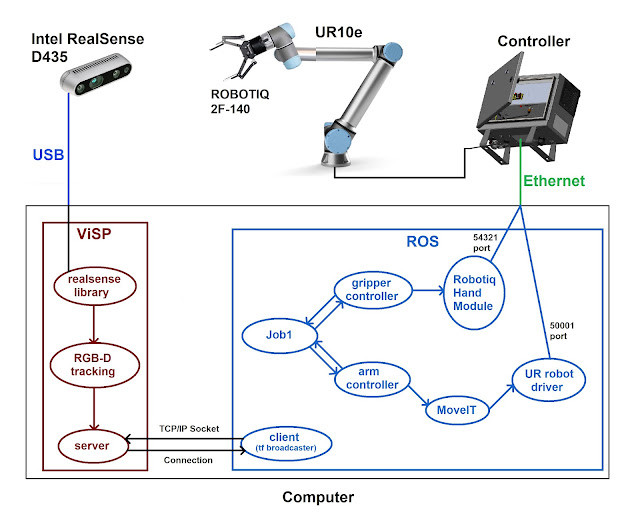

At this point, the goal is to reproduce the same object tracking (using RGB and depth data) within the ROS environment.

For this, I used the packages required to run the ViSP platform in ROS, an RGB-D camera (Intel RealSense D435i), and a woodblock as the object to be tracked.

The earlier RGB-D tracking of this same woodblock is described in one of my previous posts.

With all the required packages installed, I created a new package that launches the RGB-D camera together with a tracking node, which reads the camera images and tracks the woodblock. While tracking, the node continuously publishes the woodblock position and orientation relative to the camera frame.

The following video shows the result:

As the video shows, there are two coordinate frames: optical_camera and wood_block. The tracking node (ViSP_node) continuously estimates the wood_block frame relative to the optical_camera frame.

The node graph shows that the tracking node (ViSP_node) is designed to:

- subscribe to the /camera/color/image_raw topic

- use this image data to track the woodblock

- publish the woodblock pose in real time (relative to the camera link)
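A tracker typically produces its pose estimate as a 4x4 homogeneous transformation (in ViSP, usually a vpHomogeneousMatrix named cMo, the object pose expressed in the camera frame), whereas a TF transform carries a translation vector plus a unit quaternion. The following is a minimal sketch of that conversion, assuming the pose is already available as a row-major nested list; `matrix_to_tf` is a hypothetical helper, not part of ViSP or ROS.

```python
import math


def matrix_to_tf(T):
    """Split a 4x4 homogeneous transform into (translation, quaternion).

    Hypothetical helper. T is a row-major nested list; the quaternion is
    returned in (x, y, z, w) order, the field order of geometry_msgs/Quaternion.
    """
    t = (T[0][3], T[1][3], T[2][3])
    r = [row[:3] for row in T[:3]]
    trace = r[0][0] + r[1][1] + r[2][2]
    # Pick the numerically safest branch based on the largest diagonal term.
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)  # s = 4 * w
        w = 0.25 * s
        x = (r[2][1] - r[1][2]) / s
        y = (r[0][2] - r[2][0]) / s
        z = (r[1][0] - r[0][1]) / s
    elif r[0][0] > r[1][1] and r[0][0] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[0][0] - r[1][1] - r[2][2])  # s = 4 * x
        w = (r[2][1] - r[1][2]) / s
        x = 0.25 * s
        y = (r[0][1] + r[1][0]) / s
        z = (r[0][2] + r[2][0]) / s
    elif r[1][1] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[1][1] - r[0][0] - r[2][2])  # s = 4 * y
        w = (r[0][2] - r[2][0]) / s
        x = (r[0][1] + r[1][0]) / s
        y = 0.25 * s
        z = (r[1][2] + r[2][1]) / s
    else:
        s = 2.0 * math.sqrt(1.0 + r[2][2] - r[0][0] - r[1][1])  # s = 4 * z
        w = (r[1][0] - r[0][1]) / s
        x = (r[0][2] + r[2][0]) / s
        y = (r[1][2] + r[2][1]) / s
        z = 0.25 * s
    return t, (x, y, z, w)
```

In a ROS node, the returned values would fill the transform.translation and transform.rotation fields of a geometry_msgs/TransformStamped whose header.frame_id is camera_color_optical_frame and whose child_frame_id is wood_block. Branching on the largest diagonal term keeps the division well conditioned even when the rotation is close to 180 degrees.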

As usual, the TF tree helps clarify how the frames relate to each other:

As we can see:

- /camera/realsense2_camera_manager publishes the pose of the camera_aligned_depth_to_color_frame link relative to the camera_link link

- ViSP_node publishes the pose of the wood_block link relative to the camera_color_optical_frame link

- all the remaining transformations are published by robot_state_publisher
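Because ViSP_node only publishes wood_block relative to camera_color_optical_frame, the block's pose in any other frame of the tree is obtained by chaining the transforms along the tree, which is what a TF lookup does for you. The sketch below composes 4x4 homogeneous matrices in plain Python to express the block in camera_link. It assumes the ROS REP 103 conventions (optical frame: z forward, x right, y down; body frame: x forward, y left, z up) and, for illustration only, sets the translation between camera_link and the optical frame to zero (the real RealSense tree has a small offset). The helper names and the block pose are hypothetical.

```python
def compose(a, b):
    """Return the 4x4 product a @ b: chaining A->B with B->C gives A->C."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous matrix from a 3x3 rotation and a 3-vector."""
    rows = [list(rotation[i]) + [translation[i]] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]


# camera_link -> camera_color_optical_frame: REP 103 body-to-optical rotation.
# ASSUMPTION: the translation offset between the two frames is set to zero.
LINK_T_OPTICAL = make_transform(
    [[0.0, 0.0, 1.0],
     [-1.0, 0.0, 0.0],
     [0.0, -1.0, 0.0]],
    [0.0, 0.0, 0.0],
)

# Hypothetical tracker output: wood_block 0.5 m straight ahead of the camera,
# expressed in camera_color_optical_frame (z points forward).
OPTICAL_T_BLOCK = make_transform(
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
    [0.0, 0.0, 0.5],
)

# Chain the transforms to get the wood_block pose in camera_link.
link_T_block = compose(LINK_T_OPTICAL, OPTICAL_T_BLOCK)
print([row[3] for row in link_T_block[:3]])
```

The block, 0.5 m along the optical z axis, ends up 0.5 m along camera_link's x axis, since both axes point forward out of the camera.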
